|

|

Using Multiple blocksizes in Oracle

Oracle Tips by Burleson Consulting Updated

March 24, 2008 |

Update:

Oracle is always changing, and for the latest consensus on using multiple blocksizes in Oracle, see

the latest

research on multiple blocksizes.

The introduction of

Oracle 9i brought an amazing amount of complexity to the Oracle database engine.

Oracle introduced many new internal features, including bitmap free lists, redo

log based replication, dynamic SGA,

and perhaps the most important feature of all, the ability to support multiple

block sizes.

WARNING: Using

multiple blocksizes effectively is not simple. It requires

expert-level Oracle skills and an intimate knowledge of your I/O landscape.

While deploying multiple blocksizes can greatly reduce I/O and improve

response time, it can also wreak havoc in the hands of inexperienced DBA's.

Using non-standard blocksizes is not recommended for beginners.

At first, beginners denounced multiple block sizes because

they were invented to support transportable tablespaces. They did not know

that multiple block sizes have been used successfully in other DBMS systems

(IDMS, DB2) since the 1980's, and that multiple blocksizes are a proven

technology. Fortunately, Oracle has codified the benefits of multiple

blocksizes, and the

Oracle 11g Performance Tuning guide notes that multiple blocksizes are

indeed beneficial in large databases to eliminate superfluous I/O and isolate

critical objects into a separate data buffer cache:

?With segments that have atypical

access patterns, store blocks from those segments in two different buffer

pools: the KEEP pool and the RECYCLE pool. A segment's access pattern may be

atypical if it is constantly accessed (that is, hot) or infrequently

accessed (for example, a large segment accessed by a batch job only once a

day).

Multiple buffer pools let you address

these differences. You can use a KEEP buffer pool to maintain frequently

accessed segments in the buffer cache, and a RECYCLE buffer pool to prevent

objects from consuming unnecessary space in the cache. . .

By allocating objects to appropriate

buffer pools, you can:

- Reduce or

eliminate I/Os

- Isolate or

limit an object to a separate cache"

In general, different blocksizes can improve performance in a variety

of ways:

- Contention reduction - small rows in a large block perform worse

under heavy DML than large rows in a small blocksize.

- Faster updates - Heavy insert/update tables can see faster

performance when segregated into another blocksize which is mapped to a

small data buffer cache. Smaller data buffer caches often see faster

throughput performance.

- Reduced Pinging - RAC can perform far faster with smaller

blocksizes, reducing cache fusion overhead.

- Less RAM waste - Moving random access small row tables to a

smaller blocksize (with a corresponding small blocksize buffer) will reduce

buffer waste and improve the chance that other data blocks will remain in

the cache.

- Faster scans - Tables and indexes that require full scans can see

faster performance when placed in a large blocksize

For a complete treatment of using multiple blocksize to

improve I/O performance and manageability, see my book

"Oracle

Tuning: The Definitive Reference".

When you strip away all of

the advanced features, Oracle's job is to deliver data, and the management of

disk I/O is a very critical component and tuning of any Oracle database.

Anything that can be done to reduce the amount of disk I/O is going to have a

positive impact on the throughput of the Oracle database system.

If we take a look at the

various tuning activities within Oracle database, will see that the common goal

of almost Oracle tuning is the directed and immediate goal of reducing disk

I/O. For example, tuning an SQL statement to remove a full table scans makes the

query run faster because of the direct reduction in the amount of data blocks

that are read from the disk. Adjusting instance tuning parameters such as

DB_CACHE_SIZE also has the goal of reducing the amount of disk overhead.

To understand how using

multiple block sizes can improve performance of the Oracle database, we first

have to start by taking a look at the basic nature of disk I/O.

Understanding Oracle physical disk

I/O metrics

Anytime an

Oracle data block is accessed from disk, we commonly see three sources of delay.

The first and most important source of delay is the read-write head movement

time. This is the time required for the read-write head to position itself under

the appropriate cylinder. We also see rotational delay as the read-write head

waits for the desired block the past beneath it. The third source of delay

is the data transmission time from the disk back to the Oracle SGA.

If we accept the premise

that 99 percent of the latency is incurred prior to actually accessing the

desired data block, then it makes sense that the marginal cost for reading a 32K

block is not significantly greater than the cost of reading a 2K block. In other

words, the amount of disk delay is approximately the same regardless of the size

of the block. Therefore it should follow that the larger the block you can

read in on a single I/O, the less overall I/O will be performed on the Oracle

database.

The principle behind

caching is not unique to Oracle databases. Access for RAM is measured in

nanoseconds, while access from disk is generally measured in milliseconds. This

amounts to a to an order of magnitude improvement in performance if we can get

the Oracle data block into a RAM buffer. As Oracle grows more sophisticated and RAM becomes cheaper, we tend

to see Oracle databases with system global areas (SGA) that commonly exceed 10

GB. This has important ramifications for the performance of the Oracle database

because once read, the Oracle data blocks reside in RAM where they can be

accessed tens of thousands of times faster than having to go to disk in order to

retrieve the data block.

RAM buffers and Oracle

data access Oracle has always provided RAM data buffers to hold incoming data blocks, and data can be

read from the buffers 14,000 times faster than reading the data block from disk.

The RAM data buffer has evolved from a single buffer in Oracle7 to three data

buffers in Oracle8i. These were known as the KEEP pool, the RECYCLE pool, and

the DEFAULT pool (refer to figure 1).

Figure 1 - The Oracle8 data buffers

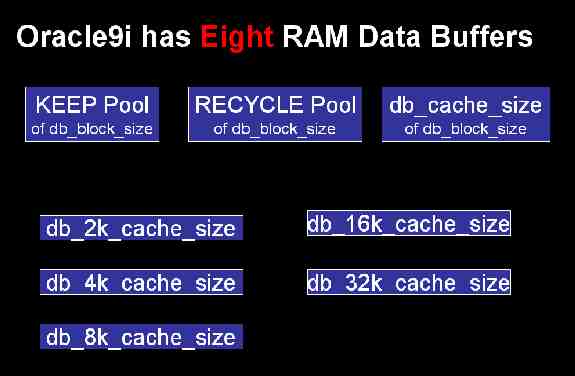

In Oracle we

still have the three data buffers, but we also have the ability to create a data

buffer for every supported blocksize for the Oracle server (refer to figure 2).

Figure 2 - The eight data buffers for Oracle

Within each data buffer, the data buffer hit ratio measures the propensity of a

data block to be in RAM memory. It is the job of the Oracle administrator to

allocate RAM pages among the data buffers to ensure the optimal amount of RAM

caching. With small buffers, a marginal increase of pages results in superior

caching (refer to figure 3).

Figure 3 - RAM pages added to a small data buffer

As

the RAM cache is increased, the marginal benefit from adding pages decreases

(refer to figure 4).

Figure 4 - The marginal decrease of disk I/O with large data buffers

Indexes and Large Data Blocks

Prior to Oracle, Oracle

professionals noticed that by moving the entire database to a larger block size,

they reduce disk I/O improve the performance of the entire system. This is

somewhat counterintuitive, and people ask "if I only need an 80-byte row, where

do I get the benefit of reading 16K block?"

The answer has to do with

indexes. Most well-tuned Oracle database have index based roughly equal to the

space of the table data. There's no question of a large block size for indexes

is going to reduce I/O, and therefore improve the overall performance of the

entire database.

For most databases, creating multiple blocksizes is not

going to make a measurable difference, and the deployment of multiple blocksizes

must be carefully evaluated on a case-by-case basis. The DBA must carefully evaluate their database

for I/O patterns and buffer efficiency to see if

multiple blocksizes are right for their unique system.

While it is generally accepted that multiple

blocksizes are not for every shop, they may be appropriate for

large multi-terabyte databases where the DBA wants to incur the

additional monitoring complexity to be able to control their I/O

buffers at a lower-level of granularity.

For example, insert-intensive databases will perform less

write I/O (via the DBWR process) with larger block sizes. This is because

more "logical inserts" can take place within the data buffer before the block

becomes full and requires writing it back to disk. Some shops define their

high-insert tablespaces in a larger blocksize to minimize I/O, and some use SSD

to achieve insert speeds of over 10,000 rows per second.

For a more complete discussion, see my

Oracle Multiple blocksize tips.

For these very large, high activity databases, some DBA's will choose

to create a 32K

tablespace, a corresponding 32K data buffer, and then migrate all of the indexes

(but only those that experience multiblock reads) from their existing blocks into the 32K tablespace. Upon having

done this, the Oracle database can read a significant amount of index note

branches in a single disk I/O, thereby reducing stress on the system and

improving overall performance.

Allocating Objects into Multiple Block

Buffers

So given that we have the

ability to create multiple data buffers within the Oracle database, how do we

decide what data that we want to put each of these data buffers?

Let's start with some of

the more common techniques.

Segregate large-table full-table scans

- Tables that experience large-table full-table scans will benefit from the

largest supported block size and should be placed in a tablespace with your

largest block size, setting db_file_multiblock_read_count according to this

blocksize.

Set db_recycle_cache_size carefully

- If you are not setting db_cache_size to the largest supported block size for

your server, you should not use the db_recycle_cache_size parameter. Instead,

you will want to create a db_32k_cache_size (or whatever your max is), and

assign all tables that experience frequent large-table full-table scans to the

largest buffer cache in your database.

The Data Dictionary uses the default cache

- You should ensure that the data dictionary (e.g. your SYSTEM tablespace) is

always fully cached in a data buffer pool. Remember, the block size of the data

dictionary is not as important as ensuring that the data buffer associated with

the SYSTEM tablespace has enough RAM to fully-cache all data dictionary blocks.

Segregate Indexes

- in many cases, Oracle SQL statements will retrieve index information via an

index range scan, scanning the b-tree or bitmap index for ranges of values that

match the SQL search criteria. Hence, it is beneficial to have as much of an

index residing in RAM as possible.

Segregate random access

reads - For those

databases that fetch small rows randomly from the disk, the Oracle DBA can

segregate these types of tables into 2K Tablespaces. We have to remember that

while disk is becoming cheaper every day, we still don't want to waste any

available RAM by reading in more information to RAM than the number actually going be

used by the query. Hence, many Oracle DBAs will use small block size is in cases

of tiny, random access record retrieval.

Segregate LOB column

tables - For those

Oracle tables that contain raw, long raw, or in-line LOBs, moving the table rows

to large block size will have an extremely beneficial effect on disk I/O.

Experienced DBAs will check dba_tables.avg_row_len to make sure that the

blocksize is larger than the average size. Row chaining will be reduced while at

the same time the entire LOB can be read within a single disk I/O, thereby

avoiding the additional overhead of having Oracle to go out of read multiple

blocks.

Check the average row

length - The block

size for a tables' tablespace should always be greater than the average row

length for the table (dba_tables.avg_row_len). Not it is smaller than the

average row length, rows chaining occurs and excessive disk I/O is incurred.

Tools for Viewing Data Buffer Usage

The process of segregating

Oracle objects into separate data buffers is fairly straightforward and Oracle

provides tools to assist in this effort. Many Oracle administrators are not

aware of those table blocks, which consume a disproportional amount of data space

within the data buffer caches, and Oracle provides numerous scripts to allow

you to see which objects reside most frequently within the data cache.

The query below counts the

number of blocks for all segments that reside in the buffer cache at that point

in time. Depending on your buffer cache size, this could require a lot of sort

space.

column

object_name format a40

column number_of_blocks format 999,999,999,999

column object_name format a40

column number_of_blocks format 999,999,999,999

SELECT

o.object_name,

COUNT(1) number_of_blocks

FROM

DBA_OBJECTS o,

V$BH bh

WHERE

o.object_id = bh.objd

AND

o.owner != 'SYS'

GROUP BY

o.object_name

ORDER BY

count(1) desc;

Here we see the object

name and the number of data blocks in the buffer.

OBJECT_NAME NUMBER_OF_BLOCKS

---------------------------------------- ----------------

ORDER_TABLE 123,273

ORDER_IDX 112,492

CUSTOMER 83,272

. . .

OEM_EXT 701

Creating Separate Data Buffers

The process of assigning

table or index blocks into named block size tablespaces is very straightforward

within Oracle.

The first step is to set a

database cache to correspond to that block size. Remember, with Oracle 9i we no

longer have to use the init.ora file, and we can create the named cache

dynamically with an alter database statement.

alter system set db_2k_cache_size=200M;

alter system set db_4k_cache_size=500M;

alter system set db_8k_cache_size=800M;

alter system set db_16k_cache_size=1600M;

We begin by creating a

tablespace and using the new blocksize parameter in the create tablespace

syntax. In the example below we create a 32K tablespace within the Oracle

database.

create tablespace

db_32k_tablespace

datafile

'/u01/oradata/mysid/32k_file.dbf'

size

100M

blocksize

32k

;

Once we've created the

named RAM buffer, and the tablespace, we are now ready to migrate the Oracle

objects into the new tablespace. There a variety of methods for moving objects

from one tablespace to another, and many Oracle administrators are already

familiar with using the create table as select or CTAS syntax in order to move the table. For indexes, the alter

index rebuild command can be used to quickly migrate an index tree between

tablespaces.

Conclusion

Out of all of the

sophisticated enhancements of Oracle, many experienced DBAs consider multiple

block sizes to be the most important feature for tuning the Oracle database. The

Oracle database administrator now has up to seven separate and distinct data

pools that they can manage and control, giving the Oracle DBA a huge amount of

control over the amount of data buffer blocks that can be assigned to specific

database objects. Through judicious inspection all of buffer access

characteristics, overall disk I/O can be tremendously reduced, and the

performance of the database will be greatly improved.

|

If you like Oracle tuning, see the book "Oracle

Tuning: The Definitive Reference", with 950 pages of tuning tips and

scripts.

You can buy it direct from the publisher for 30%-off and get

instant access to the code depot of Oracle tuning scripts. |