| |

|

|

Oracle hash joins tips

Oracle Tips by Burleson Consulting

Don Burleson

|

The following is from the bestselling book

Oracle 10g Grid & Real Application

Clusters and the book "Oracle

Tuning: The Definitive Reference". Enabling Oracle to perform hash joins

In cases where a very small table is being

joined to a large table, the Oracle hash

join will often dramatically speed-up

the query. Hash joins are far faster

than nested loop joins in certain cases,

often in cases where your SQL is joining a large table to a small table.

However, in a production database with very large tables, it is not

always easy to get your database

to invoke hash joins without increasing

the RAM regions that control hash joins. For large tables, hash joins

requires lots of RAM.

The 200 megabyte limit for hash joins

Oracle places limits of the amount of RAM

available for hash joins so that no single session will "hog" all of the RAM.

According to

this

research using Oracle 10g, the hash join maximum is only to only 200 megabytes (5% of

pga_aggregate_target).

The Oracle DBA controls the optimizers' propensity to invoke

hash joins because the DBA must allocate the RAM resources to Oracle (using the

hash_area_size and pga_aggregate_target parameters) for the

optimizer to choose a hash join. The CBO will only choose a hash join if

you have allocated Oracle enough RAM area in which to perform the hash join.

Sizing your PGA for hash joins

The rules are quite

different depending on your release, and

you need to focus on the

hash_area_size OR the

pga_aggregate_target parameters.

Unfortunately, the Oracle hash join is more memory intensive than a nested loop

join. The default value for hash_area_size is derived as:

2* sort_area_size

Note: You may not manipulate the default value

for the hash_area_size since 10g Release 2.

This is described in the Oracle Document with the MOSC

Note ID

396009.1

If the Oracle hash join overflows the

hash_area_size memory, the hash join

will page into the TEMP tablespace,

severely degrading the performance of

the hash join.

In addition to seeing the hash_area_size,

we must also be able to adjust the

degree of parallelism in cases where we

use a full-table scan to access the

tables in a hash join.

Laurent Schneider notes in Oracle MOSC that

overriding the PGA defaults made a large batch processes run more than 8x

faster with a use_hash hint:

"I set appropriate values for pga_aggregate_target

and _pga_max_size...

alter system set

pga_aggregate_target=6G;

alter system set "_pga_max_size"=2000000000;

...and I gave the query some hints "NOREWRITE FULL USE_HASH ORDERED".

As a result, it boosted my query performance from 12 hours to 1.5 hour."

Note: Always consult Oracle Technical Support before using

any undocumented parameters.

Ah, if only it were that

easy, just change a setting and batch jobs run six times faster.

Laurent Schneider notes some perils and reliability issues relating to

this parameter and says "this parameter often leads to an

ORA-4030,

even when plenty of memory available, for some obscure reasons".

PGA

usage Note:

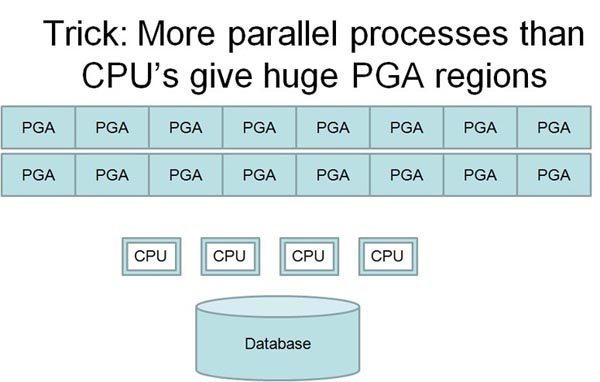

There are other tricks for overcoming the built-in

governor for PGA usage. Oracle

has a 5% limit for any individual process, and by using parallel DML any

single batch job can consume 30% of the PGA without touching any of the

undocumented parameters.

Oracle author

Laurent Schneider noted:

"I finally

opted for a more maintainable solution.

No more hints, no more undocumented parameter, but parallel

processing up to 16 threads on a 4 CPU server.

As discussed in

MOSC thread 460157.996, a supported way to increase the

maximum PGA memory per single SQL query is to increase the degree of

parallelism."

While Laurent abandoned

the undocumented approach, the promise of eight times faster execution

speeds are very tempting. Once you get permission from Oracle

Technical Support to set an undocumented parameter, they can work with

to resolve errors. While they may not address bugs, they may be able

to provide alternatives and workarounds.

This short tip does not have complete

information about the hash join, but you

can get complete information from book "Oracle

Tuning: The Definitive Reference".

When hash joins fail

The hash join is very finicky,

and there are many conditions that must

be satisfied. It is not uncommon to find

that a use_hash hint is ignored, and

here are some common causes of this

problem.

-

Check hash join parameters

- Make sure

that you have the proper settings for

optimizer_index_cost_adj and optimizer_max_permutations

(Oracle9i and

earlier) to limit the number of table

join evaluations. Also check your values

for hash_area_size and hash_multiblock_io_count. If using

pga_aggregate_target, remember that it

will not use more than 5% of the space

for any hash join and you may need to

override it.

- Verify the hash

join driving table

-

Make sure that the smaller table is the

driving table (the first table in the

from clause when using the ordered

hint). This is because a hash join

builds the memory array using the

driving table.

- Analyze CBO statistics

-

Check that tables and/or columns of the

join tables are appropriately analyzed

with dbms_stats.

- Check for skewed columns

-

Histograms are recommended only for

non-uniform column distributions. If

necessary, you can override the join

order chosen by the cost-based optimizer

using histograms or the ordered hint.

- Check RAM region - Ensure

that hash_area_size is large enough to

hold the smaller table in memory.

Otherwise, Oracle must write to the TEMP

tablespace, slowing down the hash join.

Oracle recommends that the

hash_area_size for the driving table be

set at 1.6 times the sum of bytes for

the driving table, and you can use the

hash_area.sql script to set the

hash_area_size for your query session.

If you have followed these steps, you

should be able to add a use_hash hint

ton your SQL to invoke a hash join.

Remember, it is always a good idea to

run a explain plan on your SQL to ensure

that the hash join is being invoked.

Tracking hash joins

Because hash joins are so tightly controlled by available

memory, the savvy DBA might

track hash joins over

time. You can use SQL scripts to track system-wide hash joins.

select

to_char(

sn.begin_interval_time,

'yy-mm-dd

hh24'

)

snap_time,

count(*)

ct,

sum(st.rows_processed_delta)

row_ct,

sum(st.disk_reads_delta)

disk,

sum(st.cpu_time_delta)

cpu

from

dba_hist_snapshot

sn,

dba_hist_sqlstat

st,

dba_hist_sql_plan

sp

where

See code depot for full hash sizing

scripts

st.snap_id

= sn.snap_id

and

st.dbid = sn.dbid

and

st.instance_number = sn.instance_number

and

sp.sql_id = st.sql_id

and

sp.dbid = st.dbid

and

sp.plan_hash_value = st.plan_hash_value

and

sp.operation = 'HASH JOIN'

group

by

to_char(sn.begin_interval_time,'yy-mm-dd

hh24')

having

count(*) > &hash_thr;

The sample output might look the following, showing the number of

hash joins during the snapshot period along with the relative I/O

and CPU associated with the processing. The values for

rows_processed are

generally higher for hash joins which do full-table scans as opposed

to nested loop joins with generally involved a very small set of

returned rows.

Hash Join Thresholds

by hour

Hash

Join Rows Disk CPU

Date Count Processed Reads Time

-------------------- ----------- ----------- -----------

04-10-12 17 22 4,646 887 39,990,515

04-10-13 16 25 2,128 827 54,746,653

04-10-14 11 21 17,368 3,049 77,297,578

04-10-21 15 60 2,805 3,299 5,041,064

04-10-22 10 25 6,864 941 4,077,524

04-10-22 13 31 11,261 2,950 46,207,733

04-10-25 16 35 46,269 1,504 6,364,414

Oracle hash joins are dependent upon your

system and session parameter settings.

See my research on Oracle hash joins here:

|

If you like Oracle tuning, see the book "Oracle

Tuning: The Definitive Reference", with 950 pages of tuning tips and

scripts.

You can buy it direct from the publisher for 30%-off and get

instant access to the code depot of Oracle tuning scripts. |

|

|

|